Fail Safe, Fail Smart… Succeed! (Part 2 of 3)

This is part two of Chapter 1 from It Depends: Writing on Technology Leadership 2012-2022. Part one was published yesterday. I had to break it up so that your e-mail reader wouldn't truncate it. Part three will be sent out tomorrow.

Each failure allows you to learn many things. Take the time to learn those lessons.

Learning from failure

It can be hard to learn the lessons from failure. When you fail, your instinct is to move on, to sweep it under the rug. You don't want to wallow in your mistakes. However, if you move on too quickly, you miss the chance to gather all the lessons, leading to more failure instead of the success you seek.

Lessons from failure: your process

Sometimes the failure was in your process. The following exchange is fictional, but I've heard something very much like it more than once in my career.

"What happened with this release? Customers are complaining that it is incredibly buggy."

"Well, the test team was working on a different project, so they jumped into this one late. We didn't want to delay the release, so we cut the time for testing short and didn't catch those issues. We had test automation, and it caught some of the issues, but there have been a lot of false positives, so no one was watching the results."

"Did we do a beta test for this release? An employee release?"

"No."

The above conversation indicates a problem with the software development process (and, for this specific example, a culture-of-quality problem). If you've ever had an exchange like the one above, what did you do to solve the underlying issues? If the answer is "not much," you didn't learn enough from the failure, and you likely continued to have similar problems afterward.

Lessons from failure: your team

Sometimes your team is a significant factor in a failure. I don't mean that its members aren't good at their jobs. Your team may be missing a skill set or have personality conflicts. Trust may be lacking within the team, so people aren't open with each other.

"The app is performing incredibly slowly. What is going on?"

"Well, we inherited this component that uses this data store, and no one on the team understands it. So, we're learning as we do it, and it has become a performance problem."

If the above exchange happened on your team, then the next time you decide to use (or inherit) a technology, you might make sure that someone on the team knows it well, even if that means adding someone to the team.

Lessons from failure: your perception of your customers

A common source of failure, and a significant one among the lessons of Clippy, is having an incorrect mental model of your customer.

We all have myths about who our customers are. Why do I call them "myths"? The reason is that you can't precisely read the minds of every one of your customers. At the beginning of a product's life cycle, when there are few customers, you may know each of them well. That condition, hopefully, will not last very long.

How do you build a model of your users? You do user research, talk to your customer service team, run beta tests, read app reviews, tweets, and your product forums, and instrument your app to analyze user behavior.

We have many ways of interacting with subsets of our customers. Those interactions give us the feeling that we know what they want or who they are.

These exchanges provide insights into your customers in aggregate. They also fuel myths about who our customers are, because they are only a sample of the whole. We can't know all our customers, so we create personas in our minds or collectively for our team.

Suppose you have a great user research team and are rigorous in your efforts to understand your customers. You may gain in-depth knowledge of your users and their needs for your product. However, that knowledge will be transitory. Your product keeps evolving and, hopefully, keeps adding new users. Those new customers come to your product because of the specific problems they can solve with it, and those problems differ from the ones your existing users have. Your perception of your customers ages quickly: you are now building for who they were, not who they are.

Lessons from failure: your understanding of your product

You may think you understand your product; after all, you are the one who is building it! However, the product your customers are using may differ from the product you are making.

You build your product to solve a problem. In your effort to solve that problem, you may also solve other problems for your customers that you didn't anticipate. Your customers are delighted that they can solve this problem with your product. In their minds, this was a deliberate choice on your part.

Now you make a change that improves the original problem's solution but breaks the unintended use case. Your customers are angry because you ruined their product!

Lessons from failure: yourself

Failure gives you a chance to learn more about yourself. Is there something you could do differently next time? Did an external factor become obvious in hindsight that could have been detected earlier if you had approached things differently?

Our own failures tend to be the hardest to dwell on. Our natural inclination is to find fault elsewhere to console ourselves. Instead, it is worth taking some time to reflect on your own performance. You will always find something that will help you the next time.

Collecting the lessons: Project Retrospectives

The best way that I have learned to extract the lessons is to do a project retrospective.

A project retrospective aims to understand what happened in the project from its inception to its conclusion. You want to understand each critical decision, what informed the decision, and its outcome.

In a project retrospective, you are looking for the things that went wrong, the things that went well, and the things that went well but you could do better the next time. The output of the retrospective is neutral. It is not for establishing blame or awarding kudos. Instead, it exists to make sure your team learns. For this reason, it is helpful for both unsuccessful and highly successful projects.

A good way to build a healthy culture around failure is to make it customary to hold a retrospective at the end of every project in your company. Holding retrospectives only for unsuccessful projects perpetuates a blame culture.

The project retrospective repository

Since the project retrospectives are blameless, it is good to share them within your company. Create a project retrospective repository and publicize it.

The repository becomes a precious resource for everyone in your company. It shows what has worked and what has been challenging in your environment. It allows your teams to avoid making the mistakes of the past. We always want to be making new mistakes, not old ones!

The repository is also handy for new employees to teach them how projects work in your company. Finally, it is also a resource for documenting product decisions.

The retrospective repository is a valuable place to capture your products' history and process.

Spotify's failure-safe culture

I learned a lot about creating a failure-safe culture while working at Spotify. Some of the great examples of this culture were:

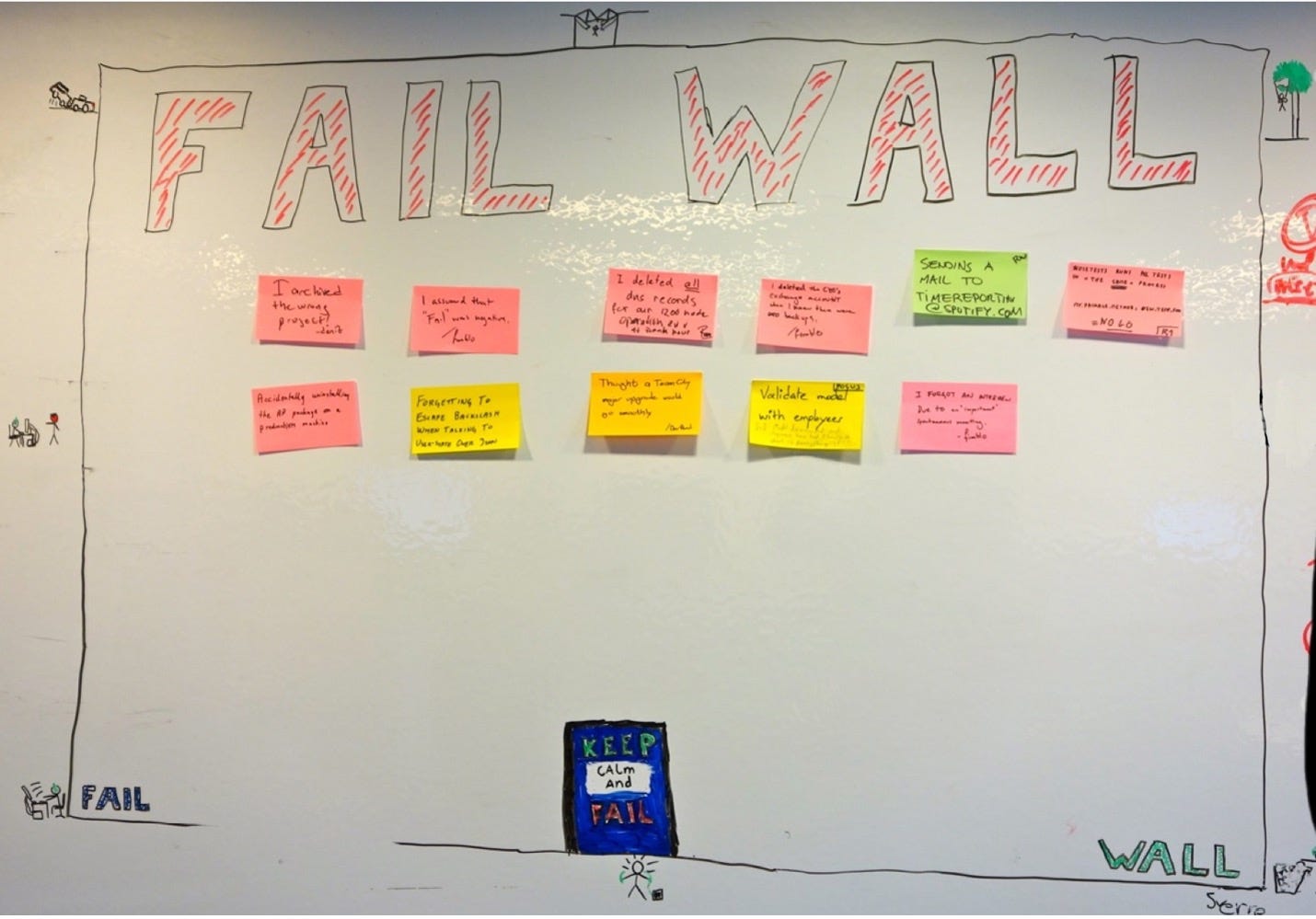

One of the squads created a "Fail Wall" to capture the things they were learning. The squad didn't hide the wall. Instead, it was on a whiteboard facing the hallway where everyone could see it.

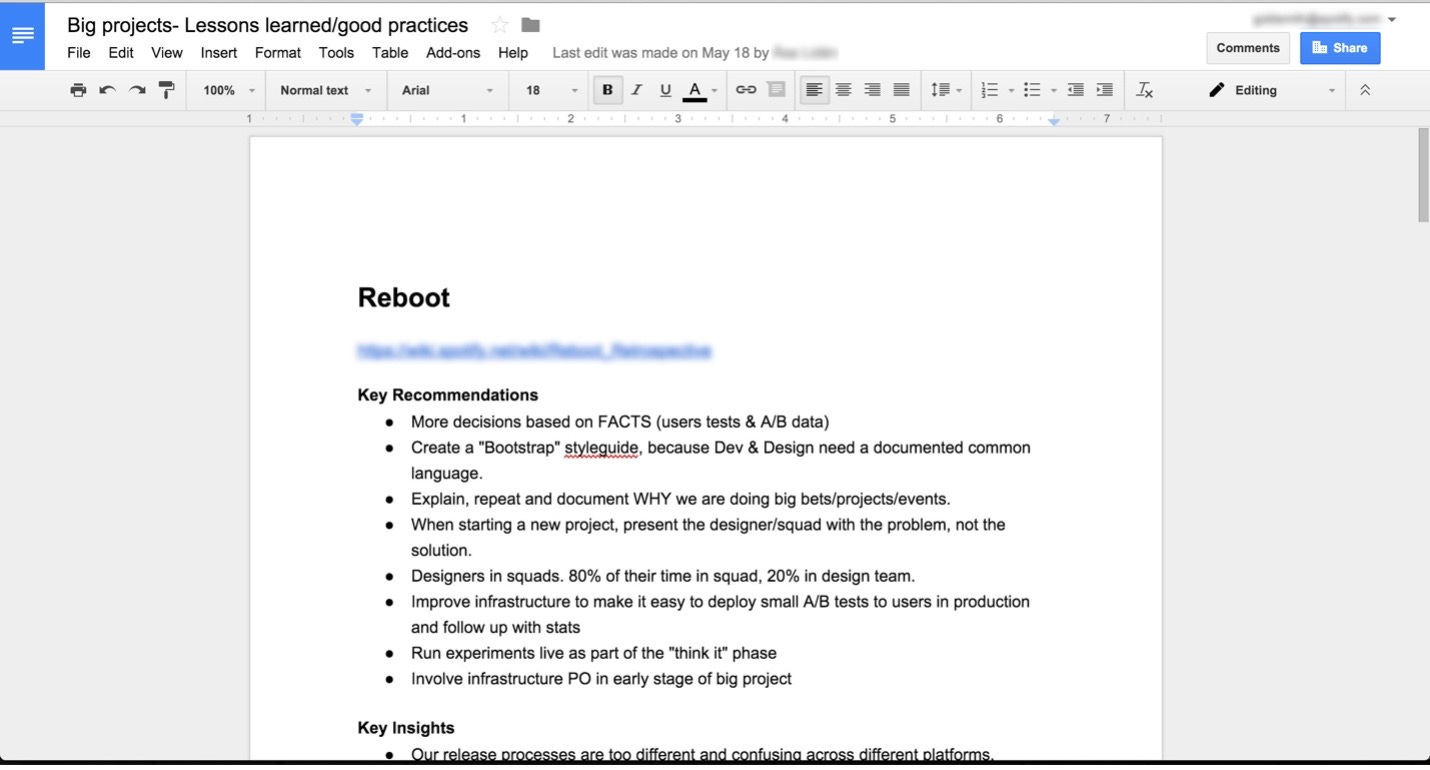

This document is a report from one of the project retrospectives. You don't need any special software for these records. For us, it was just a collection of Google Docs in a shared folder.

One of the agile coaches created a Slack channel for teams to share the lessons learned from failures with the whole company.

Spotify's CTO posted an article encouraging everyone to celebrate the lessons they learned from failure, which inspired other posts like this:

If you look at the Spotify engineering blog, there are probably more posts about mistakes than about cool things we did in the years I worked there (2013-2016).

These kinds of posts are also valuable to the wider community. When people search for something, it is usually because they are having a problem, and we might have had the same one. These posts are also very public expressions of the company culture.

Failure as a competitive advantage

We're all going to fail as we attempt to innovate. If my company can fail smart and fast, learning from our mistakes, while your company ignores the lessons from failure, my company will have a competitive advantage.

Making Failure Safer

How do we turn a fuel-air bomb failure into an internal combustion failure? How can we fail safely?

Minimizing the cost of failure

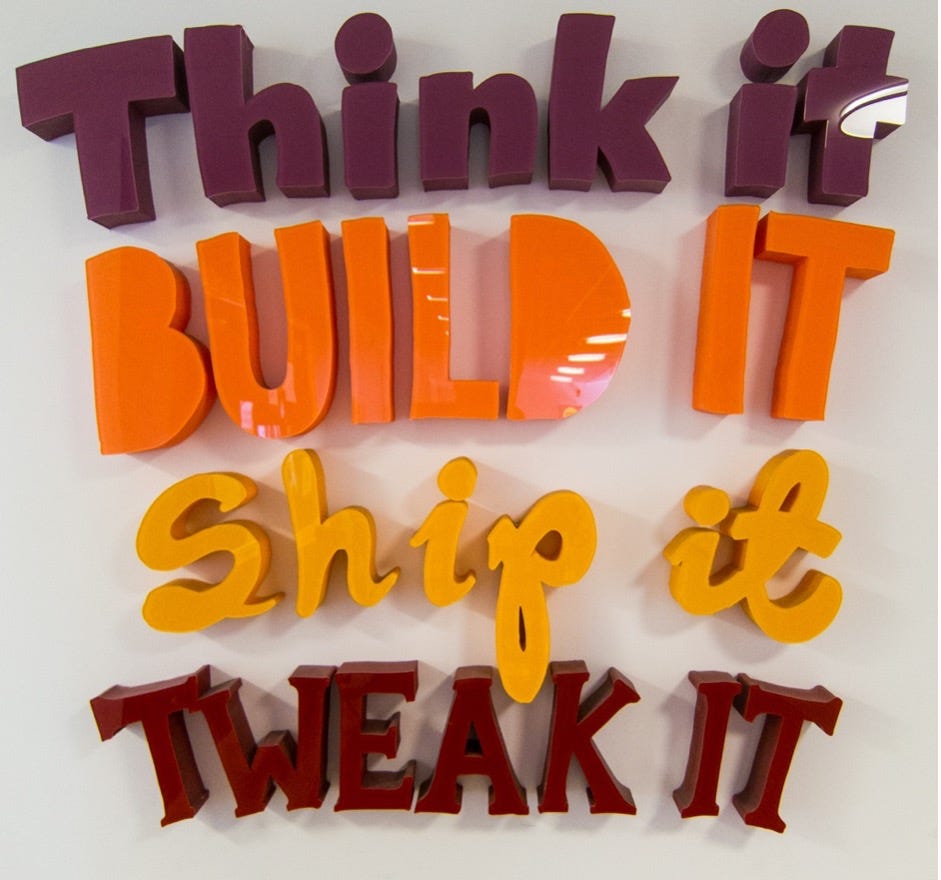

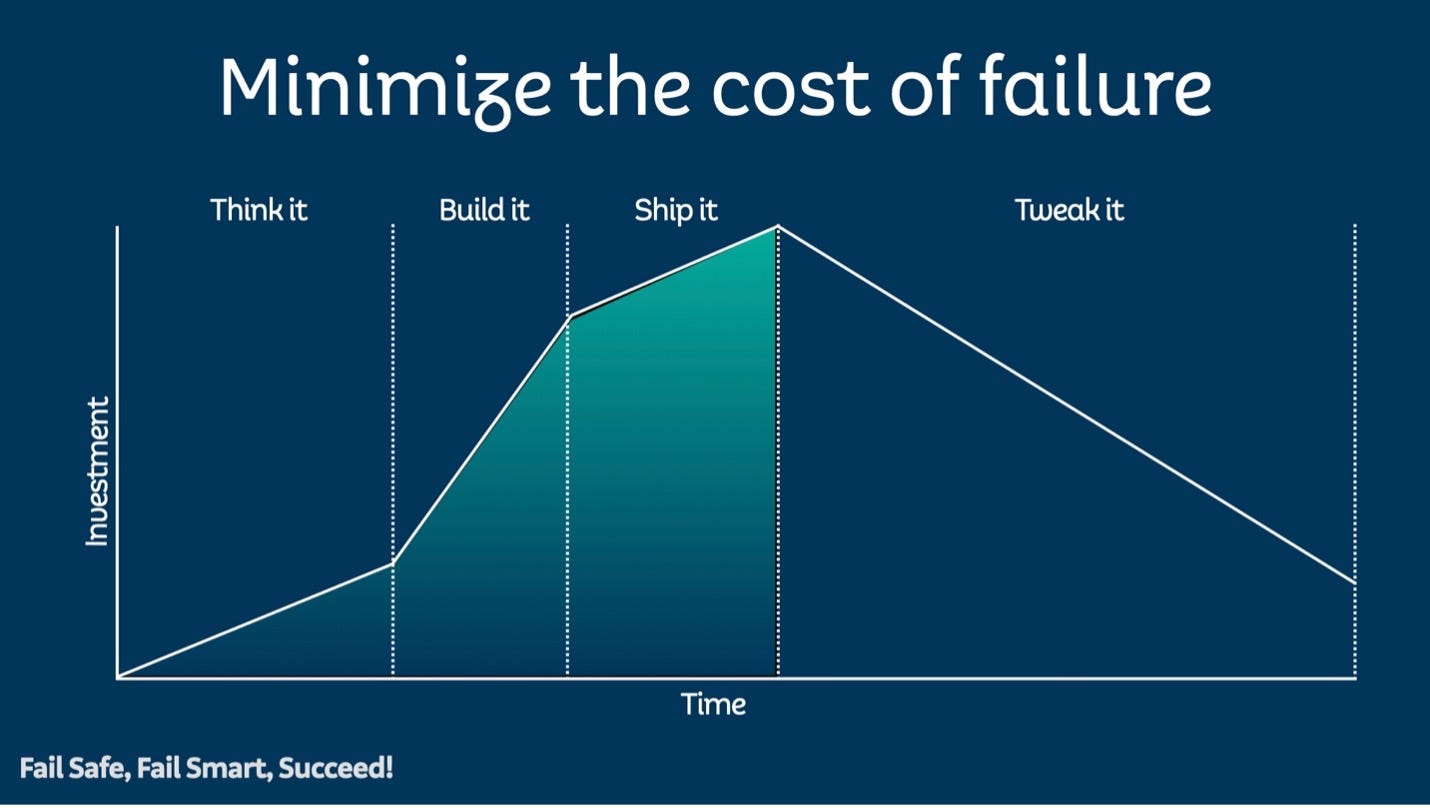

If you fail quickly, you are reducing the cost in time, equipment, and expenses. At Spotify, we used a framework rooted in Lean Startup to reduce the cost of our failures. We named the framework "Think it, Build it, Ship it, Tweak it."

This graph shows investment in a feature over time through the different phases of the framework. Investment here means people's time, material costs, equipment, opportunity cost, or any other cost.

Think It

Imagine this scenario: you are a developer returning from lunch with some coworkers, and you have an idea for a new feature. You discuss it with your product owner, who likes the idea, so you decide to explore whether it would be a valuable feature for the product. You have now entered the "Think It" phase. During this phase, you may work with the product owner and potentially a designer. This phase represents a part-time effort by a small subset of the team: a small investment.

You might create paper prototypes to test the idea with the team and customers. You may develop some lightweight code prototypes. You may even ship a very early version of the feature to some users. The goal is to test as quickly and cheaply as possible and gather objective data on the feature's viability.

You build a hypothesis on how the feature can positively impact the product, tied to real product metrics. This hypothesis is what you will validate against at each stage of the framework.

If the early data shows that customers don't need or want the feature, your hypothesis is incorrect, and you have two choices. You may iterate and try a different permutation of the concept, staying in the Think It phase and keeping the investment low. Or you may decide it wasn't as good an idea as you hoped and end the effort before investing further.

If you decide to end during the Think It phase, congratulations! You've saved the company time and money building something unnecessary. Collect the lessons in a retrospective and share them so everyone else can learn.

Build It

The initial tests look promising. The hypothesis isn't validated yet, but the indicators warrant further investment, and your tests give you some direction for the first version of the feature.

Now is the time to build the feature for real. The investment increases substantially as the rest of the team gets involved.

How can you reduce the cost of failure in the Build It phase? First, you don't build the fully realized conception of the feature. Instead, you develop the smallest version that will validate your initial hypothesis, the MVP (Minimum Viable Product). Your goal is validation with the broader customer set.

The Build It phase is where many companies I speak to get stuck. If you have the complete product vision in your head, a minimal representation of it seems weak. Folks in love with their ideas have difficulty finding the core element that validates the whole. Suppose the initial data that comes back for the MVP puts the hypothesis into question. In that case, it is easier to question the validity of the MVP than to examine the validity of the hypothesis. Defining the MVP is usually the most significant source of contention in the process.

It takes practice to figure out how to formulate a good MVP, but the effort is worth it. Imagine if the Clippy team had been able to ship an MVP. Better early feedback could have saved many person-years and millions of dollars. In my career, I have spent years (literally) building a product without shipping it. Our team's leadership shifted product directions several times without validating or invalidating any of their hypotheses in the market. We learned nothing about the product opportunity, but the development team learned much about refactoring and building modular code.

Even during the Build It phase, there are opportunities to test the hypothesis: early internal releases, beta tests, user tests, and limited A/B tests can all be used to provide direction and information.

Ship It

Your MVP is ready to release to your customers! The validation with the limited release pools and the user testing shows that your hypothesis may be valid. Time to ship.

In many companies, if not most, shipping a software release is still a binary event: one moment no users have it, the next all users have it. This approach robs you of an opportunity to fail cheaply! Your testing in Think It and Build It may have validated your hypothesis. However, it may also have provided incorrect information, or you may have misinterpreted it. On the technical side, nothing you have done to this point validates that your software performs correctly at scale.

Instead of shipping instantly to one hundred percent of your users, do a progressive rollout. At Spotify, we had the benefit of a massive scale. This scale allowed us to ship to 1%, 5%, 10%, 25%, 50%, and then 99% of our users (we usually held back 1% of our users as a control group for some time). Due to our size, we could do this rollout relatively quickly while maintaining statistical significance.
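Percentage-based rollouts like this are commonly implemented by hashing each user into a stable bucket, so that a user who gets the feature at 5% keeps it at 10%, 25%, and beyond. The sketch below is a minimal illustration under stated assumptions, not a description of Spotify's actual system: the function names, the SHA-256 bucketing, and the choice of bucket 99 as the held-back control group are all hypothetical.

```python
import hashlib

# Hypothetical rollout schedule mirroring the percentages above.
ROLLOUT_STAGES = [1, 5, 10, 25, 50, 99]
# Assumption: bucket 99 (1% of users) is permanently held back as the control group.
HOLDOUT_BUCKET = 99


def bucket_for(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given feature."""
    # Salting with the feature name keeps buckets independent across features,
    # so the same users are not always first in line for every experiment.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(user_id: str, feature: str, rollout_percent: int) -> bool:
    """Return True if the feature is on for this user at the given rollout stage."""
    bucket = bucket_for(user_id, feature)
    if bucket == HOLDOUT_BUCKET:
        return False  # the control group never sees the feature
    # Buckets 0..rollout_percent-1 are enabled, so raising the percentage only
    # adds users; nobody who already has the feature loses it.
    return bucket < rollout_percent
```

Because the bucket is derived from the user ID rather than drawn at random on each request, users get a consistent experience as the percentage grows. With a smaller user base, the same approach works with a shorter schedule, such as 10%, 50%, and 99%.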

If you have a smaller user base, you can still do this with fewer steps and get much of the value.

At each rollout stage, we'd use product analytics to see if we were validating our assumptions. Remember that we always tied the hypothesis back to product metrics. We'd also watch our systems to ensure they were handling the load appropriately and had no other technical issues or bugs arising.

If the analytics showed that we weren't improving the product, we again had two options. Should we iterate and try different permutations of the idea, or should we stop and remove the feature?

Usually, if we reached this point, we would iterate, keeping to the same percentage of users. If this feature MVP wasn't adding to the product, it was taking away from it, so rolling out further would be a bad idea. This rollout process was another way to reduce the cost of failure: it limited the percentage of users seeing a change that might hurt product metrics. Sometimes, iterating and testing with a subset of users gave us the information we needed to move forward with a better version of the MVP. Occasionally, we would realize that the hypothesis was invalid. We would then remove the feature (which is just as hard to do as you imagine, but it was easier with data backing the decision).
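The per-stage decision described above can be sketched as a comparison between users who have the feature and a control group. This is a minimal illustration that assumes the hypothesis is expressed as a conversion-style rate (for example, the share of users who perform some target action). The two-proportion z-test, the 1.96 threshold (roughly 95% confidence, two-sided), and the names `Arm` and `stage_decision` are assumptions for illustration, not the process Spotify used.

```python
import math
from dataclasses import dataclass


@dataclass
class Arm:
    users: int        # users exposed to this arm
    conversions: int  # users who performed the target action


def z_score(treatment: Arm, control: Arm) -> float:
    """Two-proportion z statistic for (treatment rate - control rate)."""
    p_t = treatment.conversions / treatment.users
    p_c = control.conversions / control.users
    pooled = (treatment.conversions + control.conversions) / (treatment.users + control.users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / treatment.users + 1 / control.users))
    if se == 0:
        return 0.0  # no variation at all (rate of 0% or 100%): nothing to conclude
    return (p_t - p_c) / se


def stage_decision(treatment: Arm, control: Arm, z_crit: float = 1.96) -> str:
    """Hypothetical rule for what to do at the current rollout percentage."""
    z = z_score(treatment, control)
    if z >= z_crit:
        return "advance"  # metric improved significantly: widen the rollout
    if z <= -z_crit:
        return "hold"     # feature hurts the metric: stay put, then iterate or remove
    return "wait"         # inconclusive: keep collecting data at this percentage
```

For example, `stage_decision(Arm(10000, 1200), Arm(10000, 1000))` compares a 12% rate with a 10% rate on 10,000 users each, gives a z statistic of about 4.5, and returns `"advance"`. A real experimentation platform would also account for repeated peeking at the data and for multiple metrics, which this sketch ignores.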

If we had removed the feature during the Ship It phase, we would have wasted time and money. However, we still would have squandered less than if we'd released a lousy feature to our entire customer base.

Tweak It

You have now released the MVP for the feature to all your customers. The product metrics validate the hypothesis that it is improving the product. You are now ready for the next and final phase, Tweak It.

The shaded area under this graph shows the investment to get a feature to customers. Until you release the feature to all your customers, you will not earn anything against the investment. Until that point, you are just spending resources. The Think It/Build It/Ship It/Tweak It framework aims to reduce that shaded area, and with it the amount invested before you start seeing a return.
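To make the shaded-area argument concrete, here is a small worked sketch with invented numbers: weekly investment in person-weeks for each phase, summed up to the point where the effort stops or the feature reaches all customers. The figures and the `investment_before_return` helper are hypothetical; the point is only that ending a failed idea early keeps the area small.

```python
from typing import Dict, List, Optional

# Hypothetical weekly investment (person-weeks) per phase; the numbers are invented.
PHASES: Dict[str, List[int]] = {
    "Think It": [1, 1, 2],
    "Build It": [6, 6, 6, 6],
    "Ship It": [3, 3],
}


def investment_before_return(phases: Dict[str, List[int]], stop_after: Optional[str] = None) -> int:
    """Sum the investment (the shaded area) up to and including `stop_after`.

    With stop_after=None, every phase is summed: the cost of reaching full release.
    """
    total = 0
    for name, weekly in phases.items():  # dicts preserve insertion order
        total += sum(weekly)
        if name == stop_after:
            break
    return total
```

With these made-up figures, an idea abandoned in Think It costs `investment_before_return(PHASES, "Think It")`, or 4 person-weeks, while reaching full release costs 34, and that is before counting any ongoing maintenance.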

The MVP does not realize the full product vision, and the metrics may be positive but not to the level of your hypothesis. There is a lot more opportunity here!

The result of the Ship It phase represents a new baseline for the product and the feature. The real-world usage data, customer support, reviews, forums, and user research can now inform your next steps.

The Tweak It phase represents a series of smaller Think It/Build It/Ship It/Tweak It efforts. From now on, your team iteratively improves the shipped version of the feature and establishes new, better baselines. These efforts will involve less and less of the team over time, and the investment will decrease correspondingly.

As you iterate, you will occasionally reach a local maximum: your tweaks result in smaller and smaller improvements to the product. Once again, you have two choices: move on to the next feature or look for another substantial opportunity with the current feature.

The difficulty is recognizing that there may be a much bigger opportunity nearby. When you reach this decision point, it can be beneficial to try a big experiment. On the other hand, you may also take a step back and look for an opportunity that might be orthogonal to the original vision but could provide a significant improvement.

You may notice in the graph that the investment never reaches zero. That gap reveals the secret, hidden fifth step of the framework.

Maintain It

Even if there is no active development on a feature, it doesn't mean there isn't any investment. The feature takes up space in the product, hogging valuable UI real estate and making it harder to add other new features. The code may be prone to breaking with library or system updates and will be the source of user-reported bugs. Maintaining documentation as the product evolves is also a burden.

This ongoing cost means it is critical not to add features to a product that do not demonstrably improve it. There is no such thing as a zero-cost feature: even if new functionality adds no incremental value for users, the company must still invest in maintaining it. Features that bring only slight improvements to core metrics may not be worth keeping, given the complexity they add.

Part 3 of this chapter will go out tomorrow.

Upcoming talk

I’m giving a talk, “The path from Director to CTO: How to follow it, or how to mentor it,” at the LeadingEng conference in New York City in September. You can use the code “DISTROKID” to save 10% on your registration! More information and registration: https://leaddev.com/leadingeng-new-york

Thanks again for reading! If you find it helpful, please share it with your friends.
